Which of these processes is spontaneous? a. the combustion of natural gas b. the extraction of iron metal from iron ore c. a hot drink cooling to room temperature d. drawing heat energy from the ocean's surface to power a ship

Two systems, each composed of three particles represented by circles, have 30 J of total energy. How many energetically equivalent ways can you distribute the particles in each system? Which system has greater entropy?
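You can count the "energetically equivalent ways" by brute-force enumeration. The figure showing each system's energy levels is not reproduced here, so this sketch makes hypothetical assumptions. It assumes distinguishable particles and evenly spaced levels that start at 0 J, either 10 J apart or 5 J apart. The function name `count_microstates` is illustrative and does not come from the source.

```python
from itertools import product

def count_microstates(n_particles, total_energy, level_spacing):
    """Count the ways to place distinguishable particles on energy levels
    0, level_spacing, 2*level_spacing, ... so the energies sum to total_energy."""
    # Highest level a single particle can occupy without exceeding the total.
    max_level = total_energy // level_spacing
    levels = [k * level_spacing for k in range(max_level + 1)]
    # Try every assignment of a level to each particle and keep
    # the assignments whose energies add up exactly to the total.
    return sum(
        1
        for assignment in product(levels, repeat=n_particles)
        if sum(assignment) == total_energy
    )

# Hypothetical level spacings (the actual figure is not available here).
print(count_microstates(3, 30, 10))  # 10
print(count_microstates(3, 30, 5))   # 28
```

Under these assumptions, the more closely spaced levels give more microstates. That is the kind of comparison the entropy question turns on.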

Key Concepts

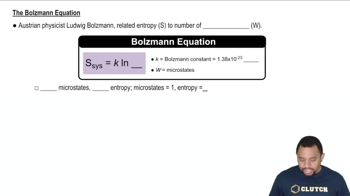

Statistical Mechanics

Entropy

Microstates and Macrostates

Which of these processes are nonspontaneous? Are the nonspontaneous processes impossible? a. a bike going up a hill b. a meteor falling to Earth c. obtaining hydrogen gas from liquid water d. a ball rolling down a hill

Two systems, each composed of two particles represented by circles, have 20 J of total energy. Which system, A or B, has the greater entropy? Why?
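Once you have counted the microstates W, the Boltzmann relation S = k_B ln W ranks the entropies. This minimal sketch assumes equally spaced levels, so W follows from a stars-and-bars count. The 10 J and 5 J unit sizes and the function names are hypothetical and are not read from the missing figure.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def microstates_equal_spacing(n_particles, quanta):
    """Stars-and-bars count of the ways to share `quanta` equal energy units
    among distinguishable particles on levels 0, 1, 2, ... units."""
    return math.comb(quanta + n_particles - 1, n_particles - 1)

def boltzmann_entropy(w):
    """S = k_B ln W for a macrostate with W microstates."""
    return K_B * math.log(w)

# Hypothetical: 20 J split into 10 J units (coarse) or 5 J units (fine).
w_coarse = microstates_equal_spacing(2, 20 // 10)
w_fine = microstates_equal_spacing(2, 20 // 5)
print(w_coarse, w_fine, boltzmann_entropy(w_fine) > boltzmann_entropy(w_coarse))  # 3 5 True
```

Because ln W increases with W, the system with more microstates has the greater entropy. A single microstate (W = 1) gives S = 0.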

Calculate the change in entropy that occurs in the system when 1.00 mole of isopropyl alcohol (C3H8O) melts at its melting point (-89.5 °C). See Table 12.9 for heats of fusion.
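Melting at the melting point is reversible and isothermal, so ΔS_sys = nΔH_fus / T, with T in kelvins. For isopropyl alcohol, T = −89.5 + 273.15 = 183.65 K, and ΔH_fus comes from Table 12.9, which is not reproduced here. Rather than guess the table value, the sketch checks itself against water's commonly quoted ΔH_fus of about 6.01 kJ/mol at 0 °C. The function name is illustrative.

```python
def entropy_of_fusion(n_mol, delta_h_fus_kj, t_melt_celsius):
    """ΔS_sys in J/K when n_mol of a solid melts at its melting point.

    At the melting point the phase change is reversible and isothermal,
    so ΔS = q_rev / T = n * ΔH_fus / T, with T in kelvins."""
    t_kelvin = t_melt_celsius + 273.15
    return n_mol * delta_h_fus_kj * 1000 / t_kelvin  # convert kJ to J

# Sanity check with water, not isopropyl alcohol.
print(round(entropy_of_fusion(1.00, 6.01, 0.0), 1))  # 22.0
```

To work the problem, pass the Table 12.9 value for isopropyl alcohol together with −89.5. The result is positive because melting absorbs heat.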

Calculate the change in entropy that occurs in the system when 1.00 mole of diethyl ether (C4H10O) condenses from a gas to a liquid at its normal boiling point (34.6 °C). See Table 11.7 for heats of vaporization.
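Condensation is the reverse of vaporization, so ΔH_cond = −ΔH_vap and ΔS_sys = −nΔH_vap / T. For diethyl ether, T = 34.6 + 273.15 = 307.75 K, and ΔH_vap comes from Table 11.7, which is not reproduced here. This minimal sketch checks the sign handling against water's commonly quoted ΔH_vap of about 40.7 kJ/mol at 100 °C. The function name is illustrative.

```python
def entropy_of_condensation(n_mol, delta_h_vap_kj, t_boil_celsius):
    """ΔS_sys in J/K when n_mol of a gas condenses at its normal boiling point.

    Condensation is the reverse of vaporization, so ΔH_cond = -ΔH_vap and
    ΔS = n * ΔH_cond / T comes out negative."""
    t_kelvin = t_boil_celsius + 273.15
    delta_h_cond_j = -delta_h_vap_kj * 1000  # convert kJ to J and flip the sign
    return n_mol * delta_h_cond_j / t_kelvin

# Sanity check with water, not diethyl ether.
print(round(entropy_of_condensation(1.00, 40.7, 100.0)))  # -109
```

Always pass the tabulated ΔH_vap, which is positive. The function flips the sign itself, which avoids a double-negative mistake.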

Without doing any calculations, determine the sign of ΔSsys for each chemical reaction. a. 2 KClO3(s) → 2 KCl(s) + 3 O2(g) c. Na(s) + ½ Cl2(g) → NaCl(s) d. N2(g) + 3 H2(g) → 2 NH3(g)
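A common shortcut for the sign of ΔSsys is the change in moles of gas. When a reaction produces more moles of gas, ΔSsys is usually positive, and when it produces fewer, ΔSsys is usually negative. The sketch below encodes that rule of thumb. The (coefficient, phase) encoding and the function names are assumptions made for illustration, and the rule gives no answer when the moles of gas do not change.

```python
def delta_n_gas(reactants, products):
    """Moles of gas in products minus moles of gas in reactants.

    Each side is a list of (coefficient, phase) pairs, where phase is
    one of "s", "l", "g", or "aq"."""
    def gas_moles(side):
        return sum(coef for coef, phase in side if phase == "g")
    return gas_moles(products) - gas_moles(reactants)

def predicted_sign_of_delta_s(reactants, products):
    """Rule-of-thumb sign of ΔS_sys from the change in moles of gas."""
    dn = delta_n_gas(reactants, products)
    if dn > 0:
        return "+"
    if dn < 0:
        return "-"
    return "?"  # no change in gas moles: this simple rule does not decide

# a. 2 KClO3(s) -> 2 KCl(s) + 3 O2(g)
reaction_a = ([(2, "s")], [(2, "s"), (3, "g")])
# d. N2(g) + 3 H2(g) -> 2 NH3(g)
reaction_d = ([(1, "g"), (3, "g")], [(2, "g")])
print([predicted_sign_of_delta_s(*r) for r in (reaction_a, reaction_d)])  # ['+', '-']
```

The heuristic works because gases have far more accessible microstates than solids or liquids. It can mislead when the moles of gas stay the same or when the phases involved are only condensed ones.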