Here are the essential concepts you must grasp in order to answer the question correctly.

Entropy

Entropy is a measure of the disorder or randomness in a system. In thermodynamics, it quantifies how many microscopic configurations (microstates) are consistent with a system's macroscopic state. Higher entropy means more possible arrangements of the particles and, in that sense, greater disorder.
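The link between entropy and the number of configurations is usually written as Boltzmann's formula. The symbols below are not in the original text: $S$ is the entropy, $W$ the number of microstates consistent with the macrostate, and $k_B$ the Boltzmann constant. This is a brief sketch that assumes all microstates are equally likely.

```latex
S = k_B \ln W, \qquad k_B = 1.380649 \times 10^{-23}\ \mathrm{J/K}

% A single possible arrangement gives zero entropy:
W = 1 \;\Rightarrow\; S = k_B \ln 1 = 0

% Doubling the number of arrangements adds a fixed amount:
S(2W) - S(W) = k_B \ln 2 \approx 9.57 \times 10^{-24}\ \mathrm{J/K}
```

Because of the logarithm, entropy grows whenever $W$ grows, which is why "more possible arrangements" and "higher entropy" go together.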


Probability and Microstates

In statistical mechanics, a microstate is one specific, detailed configuration of a system at the molecular level, while a macrostate is described only by bulk properties. If every microstate is equally likely, the probability of finding the system in a particular macrostate is proportional to the number of microstates that belong to it. A macrostate with more microstates is therefore more probable and has higher entropy.
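As a rough illustration of how counting microstates gives macrostate probabilities, the sketch below uses a toy model that is not part of the original text: a handful of distinguishable particles, each equally likely to sit in the left or right half of a box. The macrostate is "how many particles are on the left", and the function name `macrostate_probabilities` is hypothetical.

```python
from math import comb


def macrostate_probabilities(n_particles):
    """Probability of each macrostate 'k particles in the left half'.

    Assumes every microstate (a specific left/right assignment of each
    particle) is equally likely, so P(k) = C(n, k) / 2**n.
    """
    total_microstates = 2 ** n_particles
    return [comb(n_particles, k) / total_microstates
            for k in range(n_particles + 1)]


probs = macrostate_probabilities(4)
for k, p in enumerate(probs):
    # comb(4, k) is the number of microstates in macrostate k
    print(f"{k} on the left: {comb(4, k)} microstates, probability {p}")
```

With four particles, the evenly split macrostate has 6 of the 16 microstates and is the most probable, while "all on the left" has only one.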


Order vs. Disorder in Solids

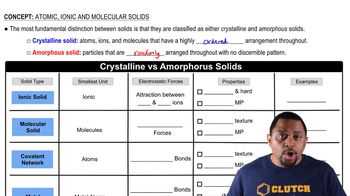

The arrangement of particles in a solid strongly affects its entropy. A perfectly ordered solid, such as an ideal crystal, can be realized in far fewer ways than a disordered solid whose particles are arranged randomly. Because the disordered solid has more possible configurations, it has the higher entropy.
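The comparison can be made concrete with a small sketch. It assumes a toy model that is not in the original text: a lattice of 100 fixed sites holding 50 atoms of type A and 50 of type B. The ordered solid is taken to have exactly one allowed arrangement, while the disordered solid may place the A atoms on any 50 sites. Only this configurational contribution to the entropy is counted, and the function name `boltzmann_entropy` is hypothetical.

```python
from math import comb, log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(n_microstates):
    """Entropy S = k_B * ln(W) for W equally likely microstates."""
    return K_B * log(n_microstates)


n_sites = 100  # assumed lattice size for the toy model
n_a = 50       # assumed number of A atoms; the rest are B atoms

w_ordered = 1                      # one perfectly ordered arrangement
w_disordered = comb(n_sites, n_a)  # A atoms may occupy any 50 sites

s_ordered = boltzmann_entropy(w_ordered)
s_disordered = boltzmann_entropy(w_disordered)

print("ordered:   ", w_ordered, "microstate(s), S =", s_ordered, "J/K")
print("disordered:", w_disordered, "microstates, S =", s_disordered, "J/K")
```

In this model the ordered solid has zero configurational entropy, and the disordered solid has a strictly positive value because it has many more allowed arrangements.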
